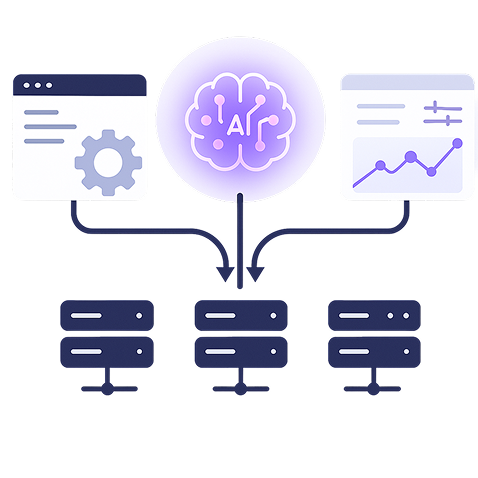

How It Works

Operationalize LLMs with Speed and Precision

End-to-end LLM lifecycle management for the enterprise, from fine-tuning to monitoring.

Fine-Tune or Prompt Engineer

Customize foundation models for your business use case with prompt templates or fine-tuning.
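As a rough illustration of the prompt-template route, the sketch below uses only Python's standard library. The template text, the field names (`company`, `ticket_text`, `max_sentences`), and the `render_prompt` helper are hypothetical and are not part of any product API.

```python
from string import Template

# Hypothetical template for a support-ticket summarizer; the wording and
# field names are illustrative assumptions only.
SUMMARY_PROMPT = Template(
    "You are a support assistant for $company.\n"
    "Summarize the following ticket in at most $max_sentences sentences:\n\n"
    "$ticket_text"
)


def render_prompt(company: str, ticket_text: str, max_sentences: int = 3) -> str:
    """Fill in the template; substitute() raises KeyError if a field is missing."""
    return SUMMARY_PROMPT.substitute(
        company=company,
        ticket_text=ticket_text,
        max_sentences=max_sentences,
    )
```

Keeping the template separate from the code that fills it means the wording can be reviewed and versioned like any other asset.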

Build Secure Pipelines

Create CI/CD pipelines for model updates, A/B testing, and rollback, all governed by role-based access controls.
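One common way to split traffic for an A/B test is deterministic hashing, sketched below. This is an assumption about how such a split could work, not a description of the platform's pipeline; the `assign_variant` function and the variant names `stable` and `candidate` are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, candidate_share: float = 0.1) -> str:
    """Map a user to 'candidate' or 'stable' deterministically.

    The same user_id always lands in the same bucket, so a user's
    experience stays consistent for the duration of the test.
    """
    if not 0.0 <= candidate_share <= 1.0:
        raise ValueError("candidate_share must be between 0 and 1")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Turn the first 8 bytes of the hash into a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "candidate" if bucket < candidate_share else "stable"
```

Under this scheme, a rollback amounts to setting `candidate_share` to `0.0`, which routes every user back to the stable model.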

Monitor and Govern

Get real-time insights into latency, token usage, failure rates, and compliance metrics.
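To make these metrics concrete, here is a minimal sketch of how latency, token usage, and failure rate might be aggregated from per-request records. The `RequestRecord` fields and the `summarize` function are assumptions made for illustration, not an actual schema.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RequestRecord:
    # Hypothetical per-request log entry.
    latency_ms: float
    prompt_tokens: int
    completion_tokens: int
    ok: bool


def summarize(records: list[RequestRecord]) -> dict[str, float]:
    """Aggregate latency, token usage, and failure rate over a batch."""
    if not records:
        raise ValueError("no records to summarize")
    failures = sum(1 for r in records if not r.ok)
    return {
        "mean_latency_ms": mean(r.latency_ms for r in records),
        "max_latency_ms": max(r.latency_ms for r in records),
        "total_tokens": sum(r.prompt_tokens + r.completion_tokens for r in records),
        "failure_rate": failures / len(records),
    }
```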

Scale Across Teams

Enable secure, controlled access to LLMs across departments with usage limits and analytics.
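A per-department usage limit can be pictured as a simple token budget. The sketch below is illustrative only: the `UsageLimiter` class, its methods, and the department names are hypothetical and say nothing about how limits are actually enforced.

```python
class UsageLimiter:
    """Track token consumption against a fixed budget per department."""

    def __init__(self, limits: dict[str, int]):
        self._limits = dict(limits)
        self._used = {dept: 0 for dept in limits}

    def try_consume(self, dept: str, tokens: int) -> bool:
        """Record usage and return True, or return False if over budget."""
        if tokens < 0:
            raise ValueError("tokens must be non-negative")
        if dept not in self._limits:
            return False  # unknown departments get no access
        if self._used[dept] + tokens > self._limits[dept]:
            return False
        self._used[dept] += tokens
        return True

    def remaining(self, dept: str) -> int:
        """Tokens left in the department's budget."""
        return self._limits[dept] - self._used[dept]
```

For example, `UsageLimiter({"legal": 1000})` would accept a 600-token request from `legal`, then reject a second 600-token request because it exceeds the remaining budget of 400.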